Sigma SD10, Sigma 70-200/2.8 EX @ 200mm, 1/200 @ f/6.3

A digital camera's image resolution, as measured in megapixels (millions of pixels), is what most people view as the primary indication of image quality. Manufacturers play to this perception, releasing cameras with increasingly greater megapixel ratings. However, there's more to image quality than the sheer number of pixels comprising that image.

Not all megapixels are created equal. Sensor size, photodetector size, and sensor technology all play a role in determining image quality, or how closely the image reflects the scene that we attempted to capture. I'll explain why in a moment.

First, let's get some terminology straight. A pixel is shorthand for picture element, and refers to one of perhaps millions of colored 'dots' that make up an image. A photosite is a particular location on an image sensor that can record a light value. Photosites may have one or more photodetectors, or light-sensing areas. The terms pixel and photodetector are often used interchangeably, but they are not necessarily the same thing. Most image sensors create a pixel by calculating its value from several adjacent photodetectors, each occupying a photosite in a two-dimensional array, while some image sensors can record multiple colors at the same X-Y photosite location via a stack of photodetectors (the Foveon sensor is the most widely known of this type). And finally, let's define image quality as a measure of how closely the RGB value of each pixel matches the value that a perfect sensor would have captured at that location. In other words, in the highest-quality image, every pixel's RGB value would match the actual color of the scene at the corresponding photosite.

Next, let's discuss how image sensors work. There are three main digital imaging sensor technologies in use today. We'll ignore the scanner backs used on medium- and large-format cameras (interesting technology but for very limited use), and look at the two variations of 'one-shot' digital imaging sensors: the Color Filter Array (CFA) sensor and the Foveon® sensor.

The most widely used sensor technology is the Color Filter Array (CFA) sensor, which consists of a two-dimensional array of photosites with a color filter array on top. Since photosites are monochromatic in nature, the CFA sensor puts a pattern of color filters on top of the sensor so each photosite is only sensitive to one of the primary colors (red, green, or blue). The most commonly used CFA is the Bayer pattern, which arranges the color filters in a repeating 2x2 tile of one red, two green, and one blue filter, and most digital image sensors used in cameras today are Bayer CFA sensors. Bayer sensors have one obvious limitation: the CFA filter assures that 25% of the photosites will detect only shades of red, another 25% will detect only shades of blue, and the remaining 50% will detect only shades of green. Most CFA sensors also have an anti-aliasing optical filter that minimizes moire by slightly blurring fine detail. A Bayer sensor's resolution varies depending on the color composition of the scene: best for black and white, better for green hues, not so good if red and blue are the predominant colors. Figure on about 60% to 70% of the theoretical resolution based on pixels per mm of sensor, or about 45 line pairs per millimeter (45 lp/mm) on the Nikon D70 and other 6 MP digital cameras equipped with Bayer sensors.
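To make the arithmetic above concrete, here is a minimal Python sketch. It tiles a 2x2 red/green/green/blue Bayer pattern to confirm the 25/50/25 split, then estimates resolution from pixel density. The function names are my own, and the sensor figures (3008 pixels across a 23.7 mm wide sensor) are values I'm assuming for a 6 MP dSLR like the D70; substitute your own camera's numbers.

```python
from collections import Counter

def bayer_color(row, col):
    """Filter color at (row, col) for a repeating 2x2 RGGB Bayer tile."""
    tile = (("R", "G"), ("G", "B"))
    return tile[row % 2][col % 2]

def color_fractions(rows, cols):
    """Fraction of photosites behind each filter color (rows and cols even)."""
    counts = Counter(bayer_color(r, c) for r in range(rows) for c in range(cols))
    total = rows * cols
    return {color: counts[color] / total for color in "RGB"}

def nyquist_lp_per_mm(pixels_across, sensor_width_mm):
    """Theoretical limit: resolving one line pair takes at least two pixels."""
    return pixels_across / (2.0 * sensor_width_mm)

print(color_fractions(4, 6))  # {'R': 0.25, 'G': 0.5, 'B': 0.25}

# Assumed sensor figures, not taken from the article: 3008 px over 23.7 mm.
limit = nyquist_lp_per_mm(3008, 23.7)
print("theoretical limit: %.1f lp/mm" % limit)
print("60%% to 70%% of that: %.1f to %.1f lp/mm" % (0.6 * limit, 0.7 * limit))
```

With those assumed dimensions, the 70% figure lands close to the 45 lp/mm quoted above.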

The second image sensor type used in dSLRs and digicams (point-and-shoot digital cameras) is the Foveon sensor. Unlike CFA sensors, the Foveon sensor has a three-dimensional photosite structure, with three photodetectors stacked at each photosite (one for red, one for green, and one for blue). The founders of Foveon discovered how to use silicon as a color filter of sorts, taking advantage of how deeply each primary color penetrates. What this means is that the image reconstruction process is as simple as taking the RGB value at each photosite and writing it out to an image file. No interpolation occurs; what you see is what you get, up to the resolving limit of the lens or the sensor. On the Foveon X3 second-generation sensor, that would equate to about 50 line pairs per millimeter (50 lp/mm).

Wait a minute! You're saying that a 3.4 MP camera has better resolution than a 6.1 MP camera? You're kidding!

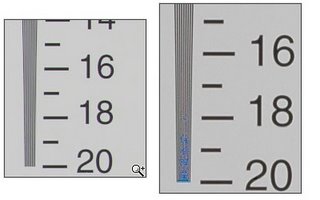

No, I'm not kidding. That is exactly the point: more pixels in an image don't mean a higher-resolution image if the extra pixels do not contain valid image data but instead reflect interpolated, "guessed" data that may or may not accurately reflect what was in front of the camera. Here's a comparison of resolution test pictures from the Foveon-equipped Sigma SD10 and the Bayer-equipped Nikon D70:

This black & white resolution chart is the best case test for a Bayer sensor because every photodetector either sees no light or some light and the color isn't important, yet the D70 and the SD10 have about equivalent resolution. The Bayer sensor's performance is dramatically diminished if resolution charts that are red, or blue, or green, on a white background are used. You can also notice the multicolor aliasing (moire) on the D70 image, a result of interpolation (guessing): certain photosites capture a boundary between black and white and because of the color filtering this is interpreted as different hues.

(image courtesy of Digit Life)
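The false color at a black-and-white boundary can be reproduced with a toy model. The Python sketch below is my own illustration, not any camera's actual processing: it samples a scene through an assumed RGGB tile and fills in the missing channels by averaging same-colored neighbors in a 3x3 window, which is far cruder than real demosaicing. The stacked readout is likewise idealized; it simply copies the three values recorded at each photosite.

```python
BLACK, WHITE = (0, 0, 0), (255, 255, 255)

def bayer_channel(row, col):
    """Channel index (0=R, 1=G, 2=B) passed by an RGGB filter tile."""
    return ((0, 1), (1, 2))[row % 2][col % 2]

def stacked_readout(scene):
    """Idealized stacked sensor: every photosite records R, G and B."""
    return [[tuple(px) for px in row] for row in scene]

def bayer_readout(scene):
    """Toy Bayer pipeline: one channel per photosite, then interpolation."""
    rows, cols = len(scene), len(scene[0])
    # Step 1: each photosite keeps only the channel its filter lets through.
    mosaic = [[scene[r][c][bayer_channel(r, c)] for c in range(cols)]
              for r in range(rows)]
    # Step 2: guess each channel by averaging same-colored 3x3 neighbors.
    image = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            sums, counts = [0.0, 0.0, 0.0], [0, 0, 0]
            for rr in range(max(0, r - 1), min(rows, r + 2)):
                for cc in range(max(0, c - 1), min(cols, c + 2)):
                    ch = bayer_channel(rr, cc)
                    sums[ch] += mosaic[rr][cc]
                    counts[ch] += 1
            out_row.append(tuple(sums[ch] / counts[ch] for ch in range(3)))
        image.append(out_row)
    return image

# A sharp vertical edge: three black columns, then three white columns.
scene = [[BLACK] * 3 + [WHITE] * 3 for _ in range(4)]

print("scene   :", scene[1][2])
print("stacked :", stacked_readout(scene)[1][2])
print("bayer   :", bayer_readout(scene)[1][2])
```

The photosite at row 1, column 2 is pure black in the scene, but the interpolated Bayer result there has unequal green and blue values and no red, so it picks up a color cast. Away from the edge, both readouts agree.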

Let's compare image quality from different-sized sensors with the same number of photosites. Obviously, smaller sensors with equivalent resolution will have physically smaller photosites. What does this mean in terms of picture quality? Generally speaking, sensors with larger photosites deliver images with less noise (a 'grainy' look). The electronic circuitry inside an image sensor will occasionally generate random electrons that mimic the signal produced by light striking the photosites. A larger photosite captures more photons over a set time, so the real signal is larger relative to that random noise, and the signal-to-noise ratio (SNR) is higher. This is why high ISO images taken with digicams (compact point-and-shoot digital cameras) with smaller sensors display much more noise than their larger-sensored digital SLR (dSLR) brethren. It is also why a 6 MP image taken with a dSLR nearly always looks better than the same 6 MP image taken with a digicam. And it is why some digicams with 6 MP sensors actually provide better image quality than a nearly identical camera by the same manufacturer with a higher megapixel rating.
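Here is a rough Python sketch of why photosite size matters for noise. It uses a simplified textbook model that I'm assuming for illustration: the signal is proportional to photosite area, photon arrival contributes noise equal to the square root of the signal, and the electronics add a fixed read noise. The pitches (7.8 and 2.5 microns), the light level, and the 5-electron read noise are hypothetical values, not measurements of any camera.

```python
import math

def snr(pitch_um, photons_per_um2, read_noise_e=5.0):
    """Signal-to-noise ratio of one square photosite.

    Simplifying assumptions: every photon that lands on the photosite is
    converted to one electron, and the whole photosite area collects light.
    """
    signal = photons_per_um2 * pitch_um ** 2          # electrons collected
    noise = math.sqrt(signal + read_noise_e ** 2)     # shot noise + read noise
    return signal / noise

light = 10.0  # hypothetical photons per square micron during the exposure

for label, pitch in (("dSLR-sized photosite", 7.8), ("digicam-sized photosite", 2.5)):
    print("%-24s pitch %.1f um  SNR %.1f" % (label, pitch, snr(pitch, light)))
```

Under these assumptions the larger photosite comes out well ahead at the same light level, and the gap widens as the light gets dimmer, which is the high ISO situation described above.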

How do you get the most resolution out of your digital camera regardless of its megapixel rating or sensor design?

- Set your camera to save images in a lossless format (raw or TIFF) instead of JPEG

- Set your camera to its highest resolution rating

- Set your camera to the lowest ISO speed that will allow you to successfully capture pictures in your situation

- Finally, for the ultimate in image quality, do your post-exposure image processing (sharpening, etc.) in an image editor such as Photoshop or Picture Window Pro (my favorite) instead of the camera
